One practical application of object detection based on convolutional neural networks (CNNs) is in devices that support people with impaired vision. An embedded device running object-detection models can make everyday life easier for users with such a disability, for example by detecting nearby obstructions.

Embedded Technology Enablers

So far, however, embedded or “wearable” devices have seen only limited use for deploying AI that supports users directly. This is largely due to the resource limitations of embedded systems, the most significant of which are computing power and energy consumption.

Embedded device technology continues to make steady progress, above all in miniaturisation. The current state of the art is a 3-nanometre process for MOSFET (metal-oxide-semiconductor field-effect transistor) devices. Smaller transistors have shorter signal propagation times, which allows higher clock frequencies. Multi-core devices allow concurrent processing, so applications can run more quickly. The energy efficiency of devices has also increased, and the energy density of modern Li-ion and Li-polymer batteries has improved substantially. Together, these factors make it feasible to run computationally intensive tasks, such as machine learning model inference, on modern embedded hardware.

As a result, AI-based embedded technology is now widely used to process and visualise medical data and to make predictions from it in real time, and an increasing number of such devices have been FDA-approved. Many more applications, however, are not on the FDA regulatory pathway, including AI applications that improve operational efficiency or provide patients with some form of support. Several thousand such devices are in use today.

Support for the Visually Impaired

Digica has developed an AI-based object-detection system which runs on a portable embedded device and is intended to assist the blind and partially sighted. The embedded device is integrated with a depth-reading camera which is mounted on the user’s body.

The system detects obstacles using the depth camera and relays information to the user through a haptic (vibration) controller and a Bluetooth earpiece. For the initial prototype, we chose a Raspberry Pi 4 as the embedded device.

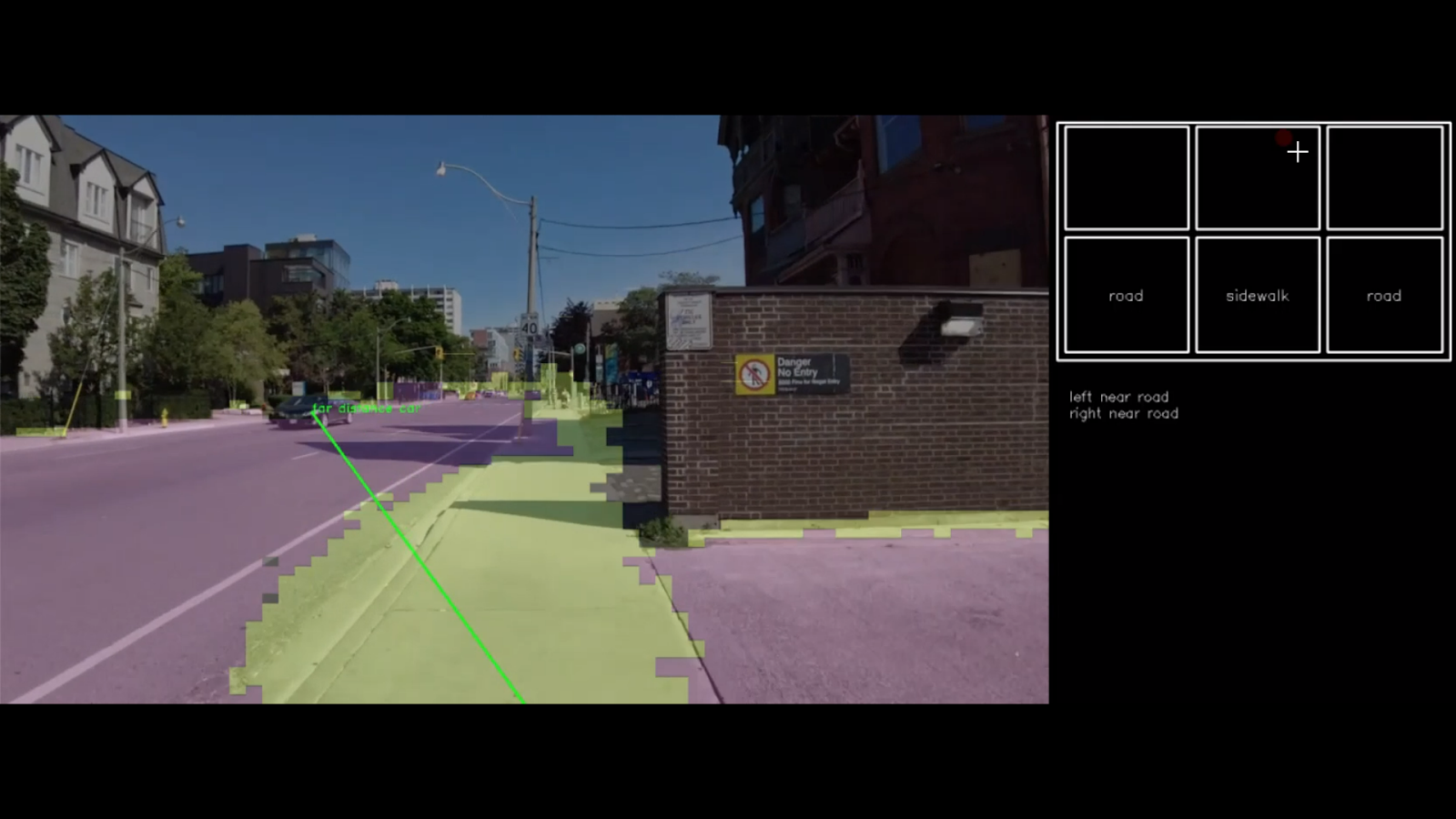

The application passes each frame captured by the camera to a segmenter and then to object detectors. The initial segmentation stage recognises large, static surfaces such as roads or sidewalks.

Example of detected segmented output

Note that the application itself does not display segmented output such as this example, because no display is connected to the device.

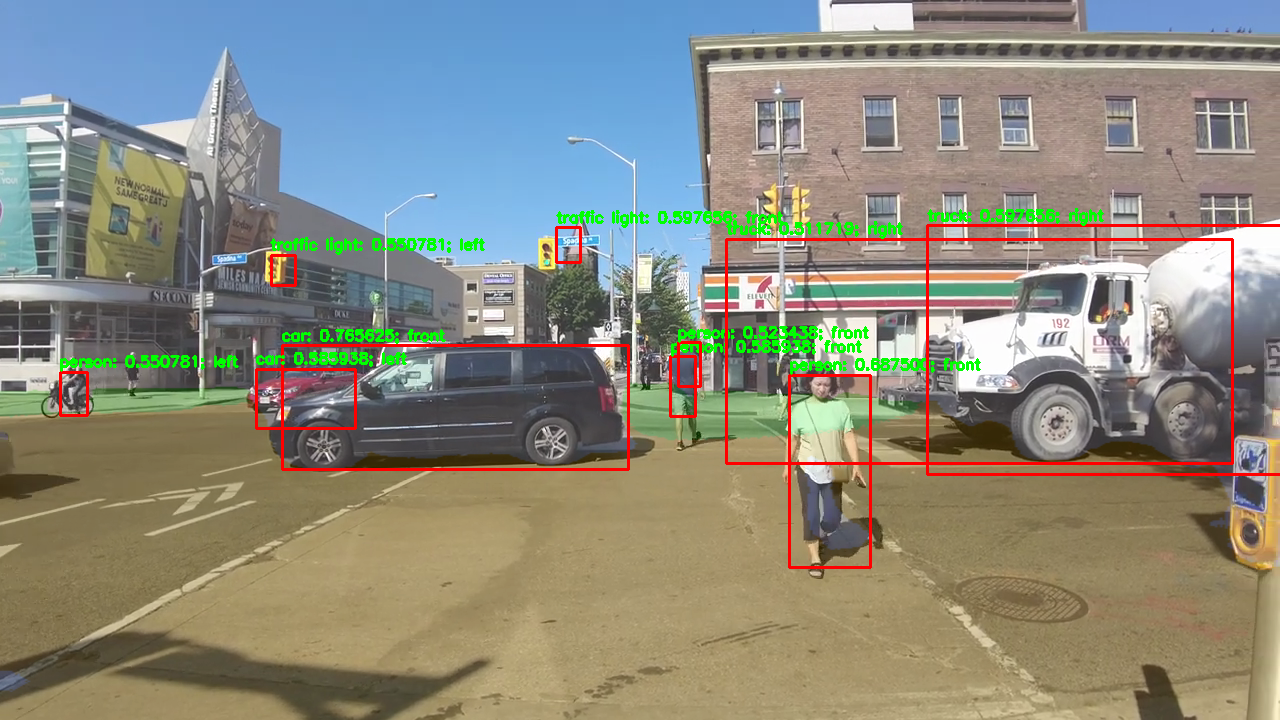

The subsequent detector stage detects dynamic objects, such as vehicles and people. A crosswalk detector forms the final stage of the pipeline. All detected items are prioritised by proximity and potential hazard before being reported to the user.
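The stage ordering described above can be sketched as a simple loop that runs each stage on the same frame and merges the results in pipeline order. This is a minimal illustration, not Digica's implementation: the `Item` structure, the stub stages and their outputs are hypothetical stand-ins for the real segmentation and detection models.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass
class Item:
    """One thing found in a frame (hypothetical structure)."""
    label: str         # e.g. "sidewalk", "car", "crosswalk"
    stage: str         # which pipeline stage produced it
    distance_m: float  # distance reported by the depth camera, in metres


# A stage takes a frame and returns the items it found.
Stage = Callable[[object], List[Item]]


def process_frame(frame: object, stages: Sequence[Stage]) -> List[Item]:
    """Run every stage on the same frame, in pipeline order, and merge results."""
    items: List[Item] = []
    for stage in stages:
        items.extend(stage(frame))
    return items


# Hypothetical stub stages standing in for real models.
def segmenter(frame: object) -> List[Item]:
    # Large, static surfaces.
    return [Item("sidewalk", "segmenter", 0.5)]


def object_detector(frame: object) -> List[Item]:
    # Dynamic, moving objects.
    return [Item("car", "detector", 7.0), Item("person", "detector", 3.0)]


def crosswalk_detector(frame: object) -> List[Item]:
    # Final stage of the pipeline.
    return [Item("crosswalk", "crosswalk", 5.0)]


if __name__ == "__main__":
    pipeline = [segmenter, object_detector, crosswalk_detector]
    for item in process_frame(frame=None, stages=pipeline):
        print(item.stage, item.label, item.distance_m)
```

Keeping each stage behind the same callable signature means a model can be swapped or a stage added without touching the loop; prioritisation would then operate on the merged list.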

Example of localised detection output

The segmentation and detection stages operate on RGB video data, while the stereo-depth camera also provides distance information. The system uses this information to alert the user to the proximity of detected objects, both through the earpiece and through haptic feedback. To keep the presentation simple, each detected object is reported as being on the left, on the right or straight ahead.
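Reporting direction and distance for a detection can be sketched as follows. The sketch assumes that the depth map is aligned pixel-for-pixel with the RGB frame and stores distances in millimetres, with 0 marking pixels where no depth could be computed; this is a common convention for stereo-depth cameras but is an assumption here. The equal-thirds split of the frame and the helper names `direction` and `distance_m` are hypothetical.

```python
from statistics import median
from typing import Optional, Sequence, Tuple

# (x_min, y_min, x_max, y_max) in pixels; max bounds are exclusive.
Box = Tuple[int, int, int, int]


def direction(box: Box, frame_width: int) -> str:
    """Classify a box as 'left', 'ahead' or 'right' by its horizontal centre.

    Assumption: the frame is split into three equal vertical bands.
    """
    centre_x = (box[0] + box[2]) / 2
    if centre_x < frame_width / 3:
        return "left"
    if centre_x >= 2 * frame_width / 3:
        return "right"
    return "ahead"


def distance_m(depth_mm: Sequence[Sequence[int]], box: Box) -> Optional[float]:
    """Median depth inside the box, in metres.

    Assumption: depth is in millimetres and 0 marks invalid pixels,
    which are ignored. Returns None if the box has no valid depth.
    """
    x0, y0, x1, y1 = box
    valid = [d for row in depth_mm[y0:y1] for d in row[x0:x1] if d > 0]
    if not valid:
        return None
    return median(valid) / 1000.0


if __name__ == "__main__":
    depth = [[0, 2000, 2200], [1800, 0, 2100]]
    print(direction((500, 100, 600, 200), 640), distance_m(depth, (0, 0, 3, 2)))
```

Taking the median rather than the mean keeps background pixels or depth holes inside the bounding box from skewing the reported distance.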

Detected objects are prioritised according to their proximity and the danger they pose to the user. For each prioritised detection, the user is given a complete set of information: the object's class (for example, a car), its location relative to the camera and its distance.
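One way to combine proximity and danger into a ranking is to divide a per-class hazard weight by the object's distance, then announce the top few detections with their class, direction and distance. This is a minimal sketch: the `HAZARD` weights, the scoring rule, the `limit` of three announcements and the message wording are all hypothetical and are not taken from Digica's system.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical hazard weights per class; unknown classes default to 1.0.
HAZARD: Dict[str, float] = {"car": 3.0, "bicycle": 2.0, "person": 1.0, "crosswalk": 0.5}


@dataclass
class Detection:
    label: str         # classified object, e.g. "car"
    direction: str     # "left", "ahead" or "right"
    distance_m: float  # distance from the camera, in metres


def priority(det: Detection) -> float:
    """Higher means more urgent: hazard weight divided by distance.

    The distance is clamped to 0.1 m to avoid division by zero.
    """
    return HAZARD.get(det.label, 1.0) / max(det.distance_m, 0.1)


def announcements(dets: List[Detection], limit: int = 3) -> List[str]:
    """Return messages for the most urgent detections, most urgent first."""
    ranked = sorted(dets, key=priority, reverse=True)[:limit]
    return [f"{d.label} {d.direction}, {d.distance_m:.1f} metres" for d in ranked]


if __name__ == "__main__":
    frame_dets = [
        Detection("car", "ahead", 6.0),
        Detection("person", "left", 1.5),
        Detection("crosswalk", "ahead", 4.0),
    ]
    for message in announcements(frame_dets):
        print(message)
```

With this rule, a pedestrian 1.5 m away (score about 0.67) outranks a car 6 m away (score 0.5); a real system would need to tune that trade-off between danger and proximity.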

Example of distance information for a prioritised object

The system uses TensorFlow and ONNX models for object detection. The target hardware is an Arm64-based Raspberry Pi, so the Arm NN SDK, an inference engine optimised for Arm processors, can be used to accelerate the AI features on the device.

Significant advances in embedded technology have made it realistic to introduce Edge AI applications, such as the one described above. The technology is small, cheap and powerful enough to justify using it in mainstream development.

At Digica, our embedded software team works together with our team of AI experts to make such developments a reality.